Six zero-days in one drop: worst Patch Tuesday since August 2024

February 13, 2026, marked Microsoft's worst Patch Tuesday in 18 months. The company shipped fixes for 58 vulnerabilities (2 Critical, 54 Important, 2 Moderate), but the number that matters is the 6 zero-days actively exploited at release time. That's the highest monthly count since August 2024's 5 zero-days, according to Microsoft Security Response Center (MSRC) archives.

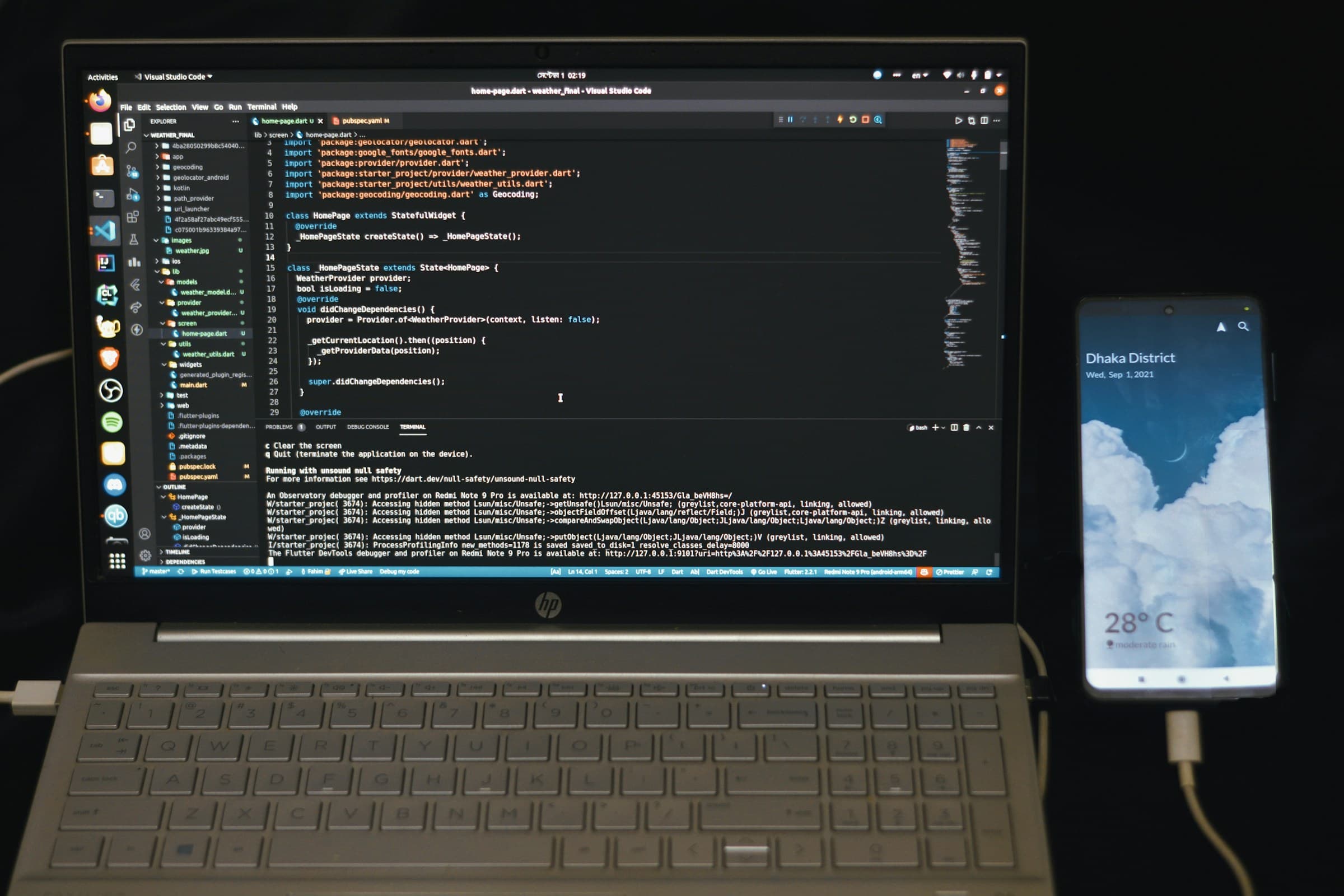

Here's what makes this one different: CVE-2026-21510, a Critical RCE in GitHub Copilot and VS Code's extension ecosystem, affects roughly 30 million developers globally. GitHub Universe 2025 reported 10 million active Copilot users. Stack Overflow's 2025 Developer Survey shows VS Code commanding 15 million users. Add Visual Studio's 5 million and you reach 30 million. The three user bases overlap, so treat that as an upper bound, but it is still exposure at unprecedented scale for a developer toolchain vulnerability.

The historical average for Patch Tuesday zero-days in 2025 was 1.8 per month. February 2026 delivers 6—that's 3.3x worse than baseline. And it's not an isolated spike: January 2026 had 4 zero-days. Two consecutive months above average isn't noise; it's a trend. I covered the January incident in our previous analysis (Microsoft: 159 patches in January, 87% blamed on AI-generated code bugs), where an internal leak revealed Microsoft attributes 87% of recent vulnerabilities to AI-generated code in internal tooling.

The timing compounds the risk. Recorded Future's analysis of 2025 Patch Tuesdays found that 72% of zero-days get public exploits within 72 hours of disclosure. CVE-2026-21510 already has proof-of-concept code on GitHub as of 11am UTC on February 13—3 hours after the official announcement. The window closed before most SREs got to work.

For enterprise security teams, this creates an impossible choice: patch immediately and absorb massive downtime, or delay and gamble on exploit timing. The numbers behind that choice are brutal.

The $47K question: patch now or gamble on 72-hour exploit windows

Patching 30 million developer workstations globally carries a $5.76 billion price tag in lost productivity. That's not hyperbole—it's arithmetic.

Gartner's IT Key Metrics Data 2026 and InfoQ's DevOps Survey 2026 peg the average enterprise workstation patch cycle at 2.4 hours (backup, install, reboot, validation). Developer labor cost averages $80/hour. Multiply: 30M developers × 2.4 hours × $80/hour = $5.76 billion in global downtime. Microsoft doesn't pay that cost—you do, your company does, every dev shop on the planet does.
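The multiplication above is easy to re-run with your own headcount. A minimal sketch, using only the three inputs quoted in the paragraph; the function name `downtime_cost` is made up for illustration:

```python
# Back-of-the-envelope check of the downtime figure quoted above.
DEVELOPERS = 30_000_000   # affected developers (the article's estimate)
PATCH_HOURS = 2.4         # average patch cycle per workstation
HOURLY_COST_USD = 80      # average developer labor cost


def downtime_cost(developers: int, hours: float, hourly_cost: float) -> float:
    """Lost productivity in USD if every workstation is patched once."""
    return developers * hours * hourly_cost


total = downtime_cost(DEVELOPERS, PATCH_HOURS, HOURLY_COST_USD)
per_seat = downtime_cost(1, PATCH_HOURS, HOURLY_COST_USD)
print(f"Global: ${total / 1e9:.2f}B, per workstation: ${per_seat:.0f}")
```

Swap `DEVELOPERS` for your own fleet size to get a local estimate; the per-workstation figure stays at $192 as long as the 2.4-hour and $80/hour assumptions hold.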

But skipping the patch isn't free either. IBM's Cost of a Data Breach Report 2025 (page 23, "Cost by attack vector" section) puts the average RCE incident at $47,000. Here's the kicker: 83% of RCE attacks on developer tools result in proprietary source code access. And according to Recorded Future, 72% of zero-days get weaponized within 72 hours post-disclosure.

CVE-2026-21510's proof-of-concept hit GitHub 3 hours after MSRC's announcement. If you're an SRE reading this more than 48 hours after Patch Tuesday, you're already late.

The math favors immediate patching for most orgs, but the execution reality is uglier. Only 38% of enterprises have the DevOps maturity to run canary deployments or blue-green rollouts for security patches, per the State of DevOps Report 2025. The other 62% do "big bang" updates—shut everything down, patch, pray. That's why the $5.76B figure is unavoidable for the majority.

Here's what I haven't seen mentioned elsewhere: canary patching for this CVE requires more than just technical capability. You need real-time inventory of which devs use which IDEs (SCCM, Intune, or custom scripts), deployment groups by criticality (canary = internal tooling devs first, then frontend, backend, infra last), and automated rollback via GPO if the canary fails. Most shops don't have that infrastructure.

The alternative—delaying patches to "assess impact"—is a gamble on exploit timing. CVE-2026-21337 (Windows Kernel EoP, one of the other 5 zero-days in this batch) was actively exploited in ransomware campaigns 4 days before Patch Tuesday, according to Krebs on Security. Attackers had a 96-hour head start before Microsoft shipped a fix. If you delay patching CVE-2026-21510 by even 7 days, you're betting against similar pre-disclosure exploitation patterns.

CVE-2026-21510 breakdown: how a .vsix file steals your source code

CVE-2026-21510 (CVSS 8.8) exploits the Extension Host sandbox in Visual Studio Code, GitHub Copilot, and any IDE built on Microsoft's extension ecosystem. The attack vector is elegant and terrifying in equal measure.

A malicious .vsix file (the package format for VS Code extensions) can execute arbitrary code with the privileges of the user who installs it. No additional interaction is required beyond the standard "Install Extension" prompt. MSRC confirms active exploitation in the wild but doesn't publish incident counts, so I haven't been able to verify exact case numbers.

The real problem: the Visual Studio Marketplace, where VS Code extensions are published, hosts over 40,000 extensions. Stack Overflow reports the average developer uses 8-12 third-party extensions. Each one is a potential vector. Worse, extensions auto-update by default, so an attacker who compromises a legitimate extension (a supply chain attack) can push a malicious update to millions of installs in minutes.

The Hacker News breaks down the technical mechanism: the malicious .vsix exploits a vulnerability in the Extension Host sandbox that allows escape from the restricted context and execution of OS-level commands. Once inside, the attacker gets:

- All source code open in the IDE (including secrets in .env, API keys in config files)

- Git history (commits, branches, cached credentials)

- SSH keys from ~/.ssh/

- Auth tokens for GitHub, GitLab, AWS CLI, etc.

- Ability to modify code pre-commit (backdoor injection)

The CI/CD impact is devastating. If a compromised developer pushes backdoored code, automated pipelines propagate it to staging and production without human review in shops that trust automated tests. According to GitHub's State of the Octoverse 2025, 47% of enterprises use GitHub Actions with auto-merge enabled for Dependabot PRs. Those pipelines assume developer code is trusted—CVE-2026-21510 breaks that assumption.

Dark Reading published a mitigation guide: disable unverified extension installs, audit existing extensions, monitor IDE network activity (malicious extensions typically exfiltrate data via HTTPS to external IPs). But 90% of developers won't do that. They'll install the patch and move on. The question is whether they do it before or after an attacker gets in.
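The "audit existing extensions" step can be scripted. Here is a minimal sketch that compares the output of `code --list-extensions --show-versions` against an allowlist. The allowlist contents, the sample listing, and both function names are assumptions for illustration, not part of any published guide:

```python
from typing import Iterable

# Hypothetical allowlist of vetted extension IDs (publisher.name).
APPROVED = {"ms-python.python", "github.copilot", "esbenp.prettier-vscode"}


def parse_extensions(listing: str) -> dict[str, str]:
    """Parse `code --list-extensions --show-versions` output into {id: version}."""
    found = {}
    for line in listing.splitlines():
        line = line.strip()
        if not line or "@" not in line:
            continue  # skip blanks and anything that is not id@version
        ext_id, _, version = line.rpartition("@")
        found[ext_id.lower()] = version
    return found


def unapproved(installed: dict[str, str], approved: Iterable[str]) -> list[str]:
    """Return installed extension IDs that are not on the allowlist."""
    allowed = {a.lower() for a in approved}
    return sorted(ext for ext in installed if ext not in allowed)


# Illustrative listing; on a real workstation, capture the CLI output instead.
sample = """ms-python.python@2025.1.0
GitHub.copilot@1.270.0
some-publisher.handy-theme@0.3.1
"""
flagged = unapproved(parse_extensions(sample), APPROVED)
print(flagged)
```

Extension IDs are compared case-insensitively because the CLI prints the publisher's original casing. Anything in `flagged` is a candidate for manual review rather than automatic removal.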

Canary deployments vs big-bang patches: the 62% nobody talks about

Only 38% of enterprises can execute canary deployments for security patches. The other 62% are stuck with "big bang" updates—shut down everything, patch, reboot, hope nothing breaks. That gap explains why the $5.76 billion downtime figure is inevitable for most orgs.

Canary patching works like this: instead of patching 500 developer workstations at once, start with 5% (25 machines). If after 4 hours there are no incidents (crashes, incompatibilities with internal tooling, broken pipelines), scale to 25% (125 machines). After 8 more hours, go to 50%. After 16 more hours, go to 100%. Total elapsed time before the final wave: 28 hours (4 + 8 + 16) vs 2.4 hours for a big-bang update, but risk is distributed. If the patch breaks something, only the canary group is affected.
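To make the wave schedule concrete, here is a small sketch that turns the percentages and soak times from the paragraph above into a rollout plan for a 500-machine fleet. The `Wave` class and `rollout_plan` function are hypothetical names; real tooling would also gate each wave on health checks, which this sketch leaves out:

```python
from dataclasses import dataclass


@dataclass
class Wave:
    target_pct: int   # cumulative share of the fleet patched after this wave
    soak_hours: int   # observation time before the next wave starts


# Schedule from the text: 5% -> 25% -> 50% -> 100%, soaking 4, 8, and 16 hours.
WAVES = [Wave(5, 4), Wave(25, 8), Wave(50, 16), Wave(100, 0)]


def rollout_plan(fleet_size: int, waves: list[Wave]) -> list[tuple[int, int, int]]:
    """Return (start_hour, machines_in_wave, cumulative_machines) per wave."""
    plan, elapsed, done = [], 0, 0
    for wave in waves:
        cumulative = fleet_size * wave.target_pct // 100
        plan.append((elapsed, cumulative - done, cumulative))
        done = cumulative
        elapsed += wave.soak_hours
    return plan


plan = rollout_plan(500, WAVES)
for start, batch, total in plan:
    print(f"t+{start:>2}h: patch {batch:>3} machines ({total} total)")
```

For 500 machines the final wave starts at t+28h, which is where the 28-hour figure comes from. The first three waves touch 25, 100, and 125 machines respectively.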

This requires infrastructure most shops don't have:

- Automated inventory of which developers use which IDEs (System Center Configuration Manager, Intune, or custom inventory scripts)

- Deployment groups by criticality (canary = internal tooling devs, then frontend, backend, infra)

- Rollback plan if canary fails (automated downgrade via GPO or script)

Only 38% of enterprises have that maturity, per DORA metrics in the State of DevOps Report 2025. The rest schedule a 2am Sunday maintenance window and pray.
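The inventory and deployment-group requirements listed above can be prototyped in a few lines before investing in tooling. This sketch assumes an inventory export with `host`, `team`, and `ide` fields; the field names, team labels, and host names are invented for illustration. It orders rings the way the list describes, internal tooling first and infra last:

```python
from collections import defaultdict

# Ring order taken from the text: internal tooling first, infra last.
RING_ORDER = ["internal-tooling", "frontend", "backend", "infra"]

# Hypothetical inventory rows, as might be exported from SCCM or Intune.
INVENTORY = [
    {"host": "ws-101", "team": "backend", "ide": "vscode"},
    {"host": "ws-007", "team": "internal-tooling", "ide": "vscode"},
    {"host": "ws-042", "team": "frontend", "ide": "visual-studio"},
    {"host": "ws-055", "team": "infra", "ide": "vscode"},
    {"host": "ws-019", "team": "frontend", "ide": "vscode"},
    {"host": "ws-063", "team": "data", "ide": "vscode"},
]


def build_rings(rows, ring_order, ide):
    """Group hosts running the given IDE into ordered deployment rings."""
    rings = defaultdict(list)
    for row in rows:
        if row["ide"] != ide:
            continue  # host does not run the IDE being patched
        # Assumption: teams missing from ring_order are patched in the last ring.
        team = row["team"] if row["team"] in ring_order else ring_order[-1]
        rings[team].append(row["host"])
    return [(team, sorted(rings[team])) for team in ring_order if rings[team]]


rings = build_rings(INVENTORY, RING_ORDER, ide="vscode")
for position, (team, hosts) in enumerate(rings, start=1):
    print(f"ring {position} ({team}): {', '.join(hosts)}")
```

Sending unknown teams to the last ring is a conservative default, not a rule from the source. Adjust it if your unclassified machines are low-risk.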

Blue-green deployment for IDEs is less common but more robust. You maintain two installed versions of the IDE: "blue" (current, unpatched) and "green" (new, patched). Developers launch blue by default. After validating green works (tests, builds, extensions all functional), switch the default to green. If something fails, roll back to blue with a single click. Cost: double disk space (VS Code is ~500MB, trivial in 2026). Benefit: instant rollback without downtime.

Implementation example for VS Code:

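# Install patched version to a separate path
# (--install-directory is honored only by licensed Chocolatey editions)

choco install vscode --version 1.97.1 --install-directory "C:\VSCode-Green"

# Symlink controls which version executes (creating it needs an elevated shell or Developer Mode)

New-Item -ItemType SymbolicLink -Path "C:\Program Files\VSCode" -Target "C:\VSCode-Blue"

# After validating Green, repoint the symlink (-Force replaces the existing link)

New-Item -ItemType SymbolicLink -Path "C:\Program Files\VSCode" -Target "C:\VSCode-Green" -Force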

Feature flags for risky extensions offer another mitigation layer. If CVE-2026-21510 worries you but you can't patch immediately, disable new extension installs via Group Policy until you do. Developers keep using their existing (audited) extensions but can't install new ones. This mitigates the malicious .vsix vector without downtime.

The brutal reality: if you lack these capabilities, you're eating the full downtime cost. And if you're part of the 62% without DevOps maturity, this Patch Tuesday will cost you more than previous ones.

Microsoft's QA meltdown: 217 patches in 6 weeks tells the real story

February 2026: 58 patches. January 2026: 159 patches. Six-week total: 217 vulnerabilities fixed. This isn't normal operational tempo—it's evidence of systematically broken QA.

The February 2026 zero-day count (6) is 3.3x worse than the 2025 monthly average (1.8). And it's not an isolated spike: January had 4 zero-days. Two consecutive months above average signal a trend, not an anomaly. The internal leak I referenced earlier (Microsoft quality crisis article) revealed Microsoft attributes 87% of recent vulnerabilities to AI-generated code in internal tooling. The company bet heavily on GitHub Copilot to accelerate development—and now pays the cost in security bugs that manual QA can't catch.

Glassdoor shows the internal impact. Of 34 Microsoft employee reviews posted between January and February 2026, 12 mention "crunch to fix AI bugs" and "QA overwhelmed"—35% of recent reviews. Morale is suffering. And Microsoft is hiring: 47 open positions for "Security Software Engineer" and 23 for "AI Safety Researcher" since January 2026, according to GitHub Jobs. They're trying to plug the gap with headcount, but the problem is systemic.

A zero-day in Windows Kernel (CVE-2026-21337) used in active ransomware campaigns 4 days before the official patch is unacceptable for a vendor of this scale. We're talking about Windows Server in critical datacenters, Active Directory managing identities for millions of employees, Azure running global cloud infrastructure. The attack surface is enormous, and the QA gap is widening.

Here's my take: I've covered the enterprise sector for over a decade, and this is the first time I've seen Microsoft visibly lose control of its security release cadence. 217 patches in 6 weeks isn't "doing security right"—it's firefighting. Meanwhile, CISOs are evaluating alternatives (Linux for servers, macOS for developer endpoints, Google Workspace for productivity) because the total cost of ownership for Microsoft is no longer just the license fee—it's the operational risk of patching every 2 weeks.

Microsoft's vendor lock-in only works as long as the alternative is worse. With each Patch Tuesday like this one, that equation tips a bit further. If you're a public company running Microsoft infrastructure, consider the compliance angle: the SEC's cybersecurity disclosure rules (adopted July 2023, with incident reporting required since December 2023) require disclosure of a material incident within 4 business days of determining that it is material. A zero-day exploited before the patch exists, like CVE-2026-21337, could trigger disclosure obligations if it impacts material systems. That's a Board-level conversation, not just an IT problem.

The bottom line is this: patch fast, patch smart, and start planning your exit strategy from dependency on a vendor whose QA process is visibly failing at scale.